Mining Worse and Better Opinions:

Unsupervised and Agnostic Aggregation of Online Reviews

Michela Fazzolari, Marinella Petrocchi, Alessandro Tommasi, Cesare Zavattari

This webpage contains additional material which, due to page limits, was not included in the research paper submitted to the Intl. Conference on Web Engineering (ICWE 2017). In particular, we report here the abstract and structure of the paper, filling the sections with material that does not appear in the submitted paper.

Abstract

In this paper, we propose a novel approach for aggregating online reviews according to the opinions they express. Our methodology is unsupervised, since it does not rely on pre-labeled reviews, and agnostic, since it makes no assumption about the domain or the language of the review content. We do not adopt opinion mining techniques; rather, we propose a novel metric, measuring the adherence of the review content to the domain terminology extracted from the review set. First, we demonstrate the informativeness of the adherence metric with respect to the score associated with a review. Then, we successfully apply this novel approach to group reviews according to the opinions they express. Our extensive experimental campaign has been carried out on two large datasets of hotel reviews and product reviews, collected from Booking and Amazon, respectively.

Summary

- Introduction
- Review Adherence to Typical Terminology
  - Extracting the Terminology
  - Adherence Definition
- Datasets
- Experiments and Results
  - Adherence Informativeness
  - Good Opinions, Higher Adherence
  - Extension to Different Languages
  - Language-Agnostic Reviews Clustering
  - Representative Terms in First and Last Bins
- Related Work
- Final Remarks

1. Introduction

In this paper, we propose an original approach to aggregating reviews with similar opinions. The proposed approach is unsupervised, since it does not rely on labelled reviews or training phases. Moreover, it is agnostic, requiring no prior knowledge of either the review domain or the review language. Reviews are grouped by relying on a newly introduced metric, called adherence, which measures how much a review text inherits from a reference terminology, automatically extracted from an unannotated review corpus. Leveraging an extensive experimental campaign over two large review datasets, in different languages, from Booking and Amazon, we first demonstrate that the value of the adherence metric is informative, since it is correlated with the review score. Then, we exploit adherence to aggregate reviews according to their positiveness. A further analysis of such groups highlights their most characteristic terms. This leads to the additional result of learning the best and worst features of a product.
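As a rough illustration of the idea of adherence, the sketch below computes the fraction of a review's distinct tokens that also appear in a reference terminology. The exact definition is given in the submitted paper; the formula used here, the naive tokenizer, the function names `tokenize` and `adherence`, and the toy terminology are all assumptions made for illustration only.

```python
import re

def tokenize(text):
    """Return the set of distinct lowercase word tokens (naive tokenizer, an assumption)."""
    return set(re.findall(r"\w+", text.lower()))

def adherence(review_text, terminology):
    """Fraction of the review's distinct tokens that belong to the terminology.

    Illustrative formula only; it is not claimed to be the paper's definition.
    """
    tokens = tokenize(review_text)
    if not tokens:
        return 0.0
    return len(tokens & terminology) / len(tokens)

# Hypothetical terminology, as if extracted from a hotel review corpus.
terminology = {"room", "clean", "staff", "breakfast"}
print(adherence("Clean room and friendly staff", terminology))
```

Since the sketch only compares tokens against a set, it needs no language-specific resources, which is in the spirit of the agnostic setting described above.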

2. Review Adherence to Typical Terminology

In this section, we define the adherence metric. Adherence measures how much a review adheres to the reference terminology extracted from a review set. All the material for this section is in the submitted paper.

3. Datasets

We consider two large datasets, composed of reviews from Booking and Amazon. All the material for this section is in the submitted paper.

4. Experiments and Results

This section describes the experiments and their results.

4.1 Adherence Informativeness

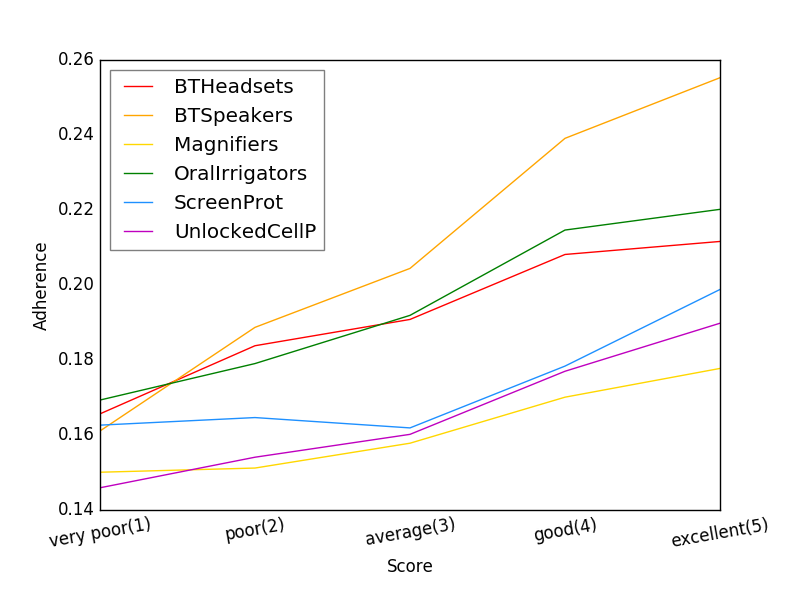

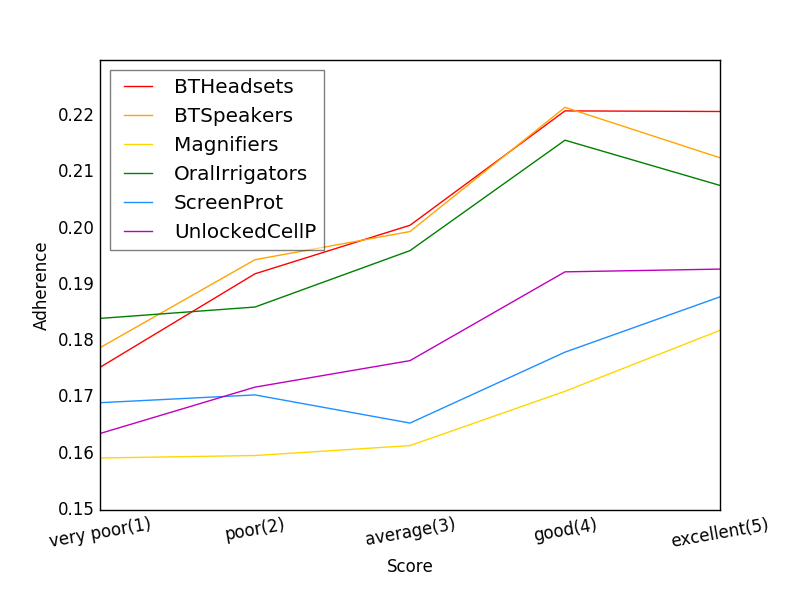

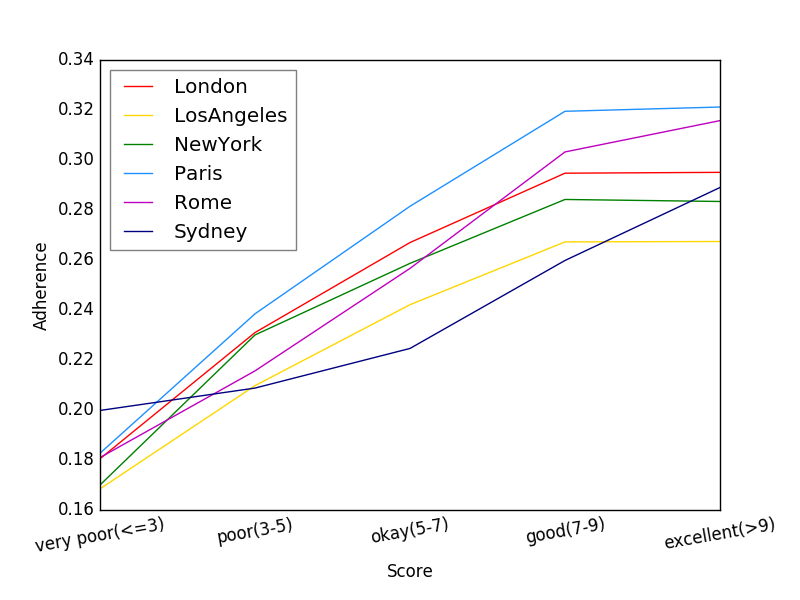

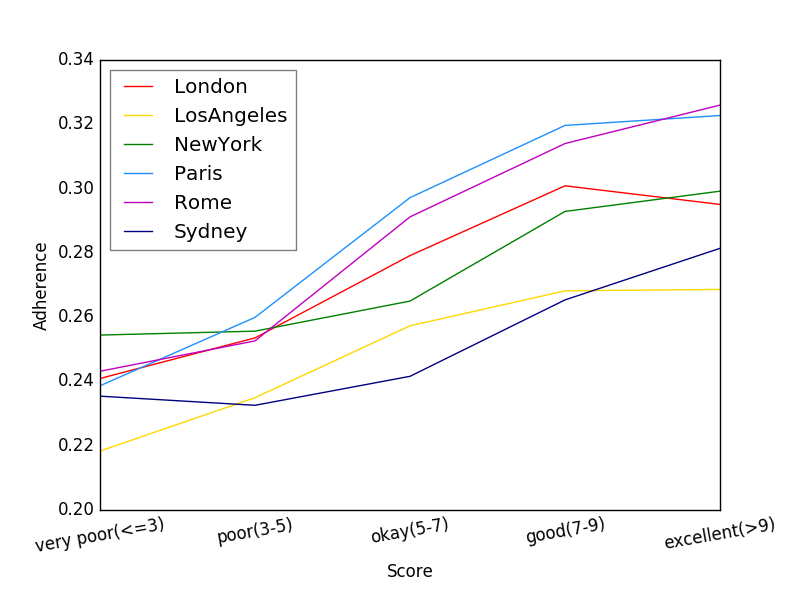

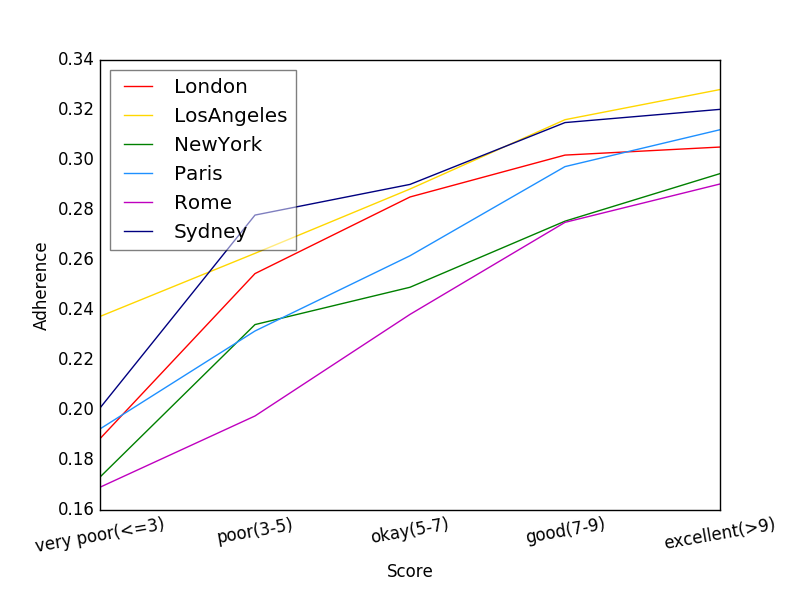

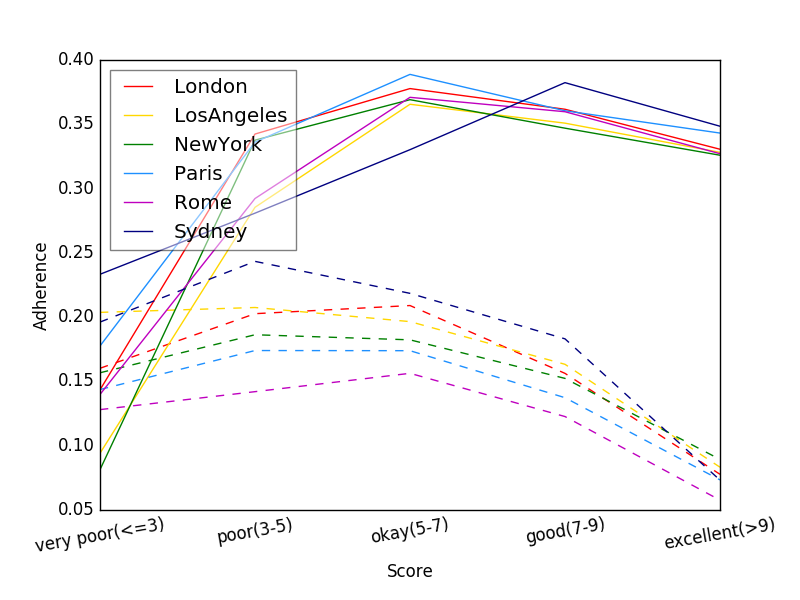

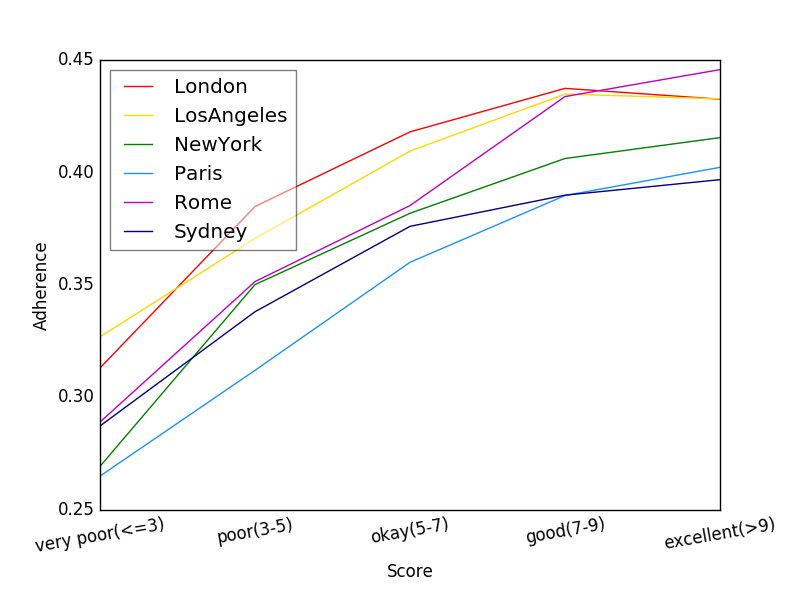

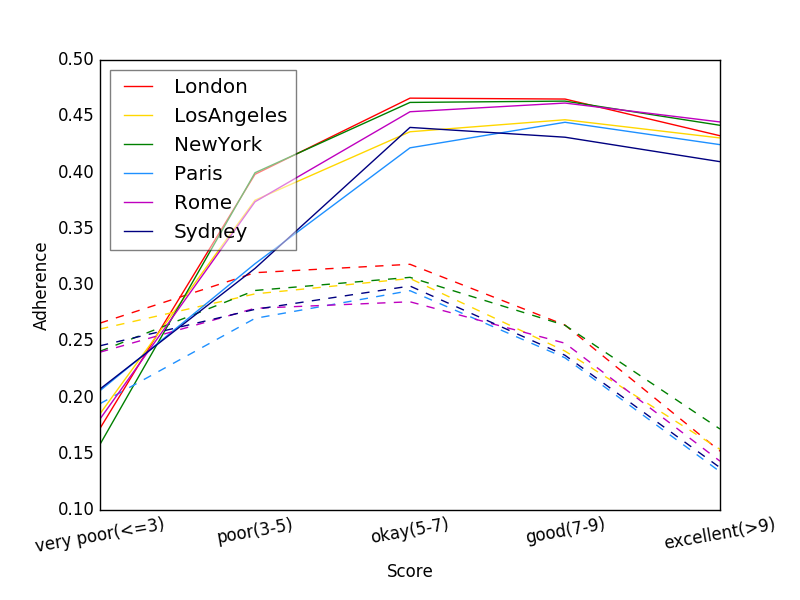

First, we show the correlation between adherence values and review scores. Here, we report the graphs plotting adherence against score, for both Booking and Amazon, in the balanced and the unbalanced case. Note: in the submitted paper, we reported the balanced datasets only.

Note that, when working on balanced bins, the typical terminology of the review corpus has been recomputed with respect to the one obtained for the original, unbalanced dataset. Thus, even for the least populated bin, the average adherence value in the balanced dataset differs from the one in the unbalanced dataset for the same bin (this holds for both the Booking and Amazon datasets, as can be seen in the related figures). Also note that the under-sampling applied to the majority classes to balance the number of reviews leads to a deterioration of the results (for both Amazon and Booking; see the differences between the balanced and unbalanced graphs).
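The under-sampling step mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions: reviews are represented as `(score_bin, text)` pairs, every bin is randomly reduced to the size of the least populated one, and the function name `undersample_by_bin` and the `seed` parameter are hypothetical. The actual sampling procedure used for the experiments may differ.

```python
import random
from collections import defaultdict

def undersample_by_bin(reviews, seed=0):
    """Randomly reduce every score bin to the size of the smallest bin.

    reviews: list of (score_bin, text) pairs (assumed representation).
    """
    by_bin = defaultdict(list)
    for score_bin, text in reviews:
        by_bin[score_bin].append((score_bin, text))
    if not by_bin:
        return []
    target = min(len(items) for items in by_bin.values())
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    balanced = []
    for score_bin in sorted(by_bin):
        balanced.extend(rng.sample(by_bin[score_bin], target))
    return balanced

# Toy data: two reviews in bin 1, six in bin 5.
reviews = [(1, "bad"), (1, "awful")] + [(5, f"great {i}") for i in range(6)]
balanced = undersample_by_bin(reviews)
print(len(balanced))
```

In the setting described above, the terminology would then be re-extracted from the balanced corpus before computing adherence, which is why the values of a given bin differ between the two settings.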

4.2 Good Opinions, Higher Adherence

These results enrich the ones reported in the related section of the submitted paper.

The following table shows the average adherence and the average standard deviation for six Amazon categories, when considering the unbalanced dataset.

| Score bin (Amazon unbalanced) | Bluetooth Headsets avg | Bluetooth Headsets std dev | Bluetooth Speakers avg | Bluetooth Speakers std dev | Magnifiers avg | Magnifiers std dev | Oral Irrigators avg | Oral Irrigators std dev | Screen Protectors avg | Screen Protectors std dev | Unlocked Cell Phones avg | Unlocked Cell Phones std dev |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.17 | 0.07 | 0.16 | 0.08 | 0.15 | 0.08 | 0.17 | 0.07 | 0.16 | 0.07 | 0.15 | 0.06 |
| 2 | 0.18 | 0.07 | 0.19 | 0.07 | 0.15 | 0.07 | 0.18 | 0.07 | 0.16 | 0.07 | 0.15 | 0.06 |
| 3 | 0.19 | 0.07 | 0.20 | 0.08 | 0.16 | 0.08 | 0.19 | 0.07 | 0.16 | 0.07 | 0.16 | 0.07 |
| 4 | 0.21 | 0.08 | 0.24 | 0.09 | 0.17 | 0.08 | 0.21 | 0.08 | 0.18 | 0.08 | 0.18 | 0.08 |
| 5 | 0.21 | 0.09 | 0.26 | 0.11 | 0.18 | 0.09 | 0.22 | 0.08 | 0.20 | 0.09 | 0.19 | 0.10 |

The following table shows the average adherence and the average standard deviation for six Amazon categories, when considering the balanced dataset.

| Score bin (Amazon balanced) | Bluetooth Headsets avg | Bluetooth Headsets std dev | Bluetooth Speakers avg | Bluetooth Speakers std dev | Magnifiers avg | Magnifiers std dev | Oral Irrigators avg | Oral Irrigators std dev | Screen Protectors avg | Screen Protectors std dev | Unlocked Cell Phones avg | Unlocked Cell Phones std dev |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.17 | 0.07 | 0.18 | 0.08 | 0.16 | 0.08 | 0.18 | 0.07 | 0.17 | 0.07 | 0.15 | 0.06 |
| 2 | 0.19 | 0.07 | 0.20 | 0.07 | 0.16 | 0.08 | 0.19 | 0.06 | 0.17 | 0.07 | 0.16 | 0.06 |
| 3 | 0.19 | 0.07 | 0.21 | 0.08 | 0.16 | 0.08 | 0.20 | 0.07 | 0.16 | 0.07 | 0.16 | 0.07 |
| 4 | 0.21 | 0.08 | 0.23 | 0.08 | 0.17 | 0.08 | 0.22 | 0.08 | 0.18 | 0.08 | 0.18 | 0.08 |
| 5 | 0.21 | 0.09 | 0.23 | 0.09 | 0.18 | 0.09 | 0.21 | 0.08 | 0.19 | 0.09 | 0.18 | 0.09 |

The following table shows the average adherence and the average standard deviation for six Booking cities, when considering the unbalanced dataset.

| Score bin (Booking unbalanced) | London avg | London std dev | Los Angeles avg | Los Angeles std dev | New York avg | New York std dev | Paris avg | Paris std dev | Rome avg | Rome std dev | Sydney avg | Sydney std dev |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.18 | 0.15 | 0.17 | 0.13 | 0.17 | 0.15 | 0.18 | 0.14 | 0.18 | 0.14 | 0.20 | 0.16 |
| 2 | 0.23 | 0.16 | 0.21 | 0.15 | 0.23 | 0.16 | 0.24 | 0.17 | 0.22 | 0.15 | 0.21 | 0.15 |
| 3 | 0.27 | 0.17 | 0.24 | 0.16 | 0.26 | 0.17 | 0.28 | 0.18 | 0.26 | 0.17 | 0.22 | 0.15 |
| 4 | 0.29 | 0.19 | 0.27 | 0.17 | 0.28 | 0.18 | 0.32 | 0.19 | 0.30 | 0.18 | 0.26 | 0.18 |
| 5 | 0.30 | 0.19 | 0.27 | 0.18 | 0.28 | 0.18 | 0.32 | 0.19 | 0.32 | 0.18 | 0.29 | 0.19 |

The following table shows the average adherence and the average standard deviation for six Booking cities, when considering the balanced dataset.

| Score bin (Booking balanced) | London avg | London std dev | Los Angeles avg | Los Angeles std dev | New York avg | New York std dev | Paris avg | Paris std dev | Rome avg | Rome std dev | Sydney avg | Sydney std dev |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.24 | 0.18 | 0.22 | 0.15 | 0.25 | 0.22 | 0.24 | 0.18 | 0.24 | 0.17 | 0.27 | 0.23 |
| 2 | 0.25 | 0.16 | 0.23 | 0.16 | 0.25 | 0.17 | 0.26 | 0.18 | 0.26 | 0.17 | 0.25 | 0.16 |
| 3 | 0.27 | 0.17 | 0.25 | 0.16 | 0.27 | 0.17 | 0.29 | 0.18 | 0.29 | 0.16 | 0.23 | 0.14 |
| 4 | 0.30 | 0.19 | 0.27 | 0.17 | 0.29 | 0.19 | 0.32 | 0.20 | 0.31 | 0.18 | 0.28 | 0.18 |
| 5 | 0.30 | 0.20 | 0.28 | 0.19 | 0.29 | 0.19 | 0.31 | 0.18 | 0.32 | 0.19 | 0.30 | 0.21 |

As shown in the tables, the standard deviation within each bin is quite high. This suggests that, even though it is correlated with the score, adherence is not a good measure when considering a single review. Rather, its informativeness should be exploited by considering an ensemble of reviews, as shown in the rest of the submitted paper.
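Per-bin statistics like those in the tables above could be computed along the following lines. This is only a sketch: the input format (pairs of score bin and adherence value), the function name `per_bin_stats`, and the use of the population standard deviation are assumptions, and the toy values are not taken from the datasets.

```python
from collections import defaultdict
from statistics import mean, pstdev

def per_bin_stats(samples):
    """Return {score_bin: (average adherence, standard deviation)}.

    samples: iterable of (score_bin, adherence) pairs (assumed representation).
    The population standard deviation is used here; this choice is an assumption.
    """
    grouped = defaultdict(list)
    for score_bin, value in samples:
        grouped[score_bin].append(value)
    return {b: (mean(v), pstdev(v)) for b, v in sorted(grouped.items())}

# Toy adherence values for two score bins.
samples = [(1, 0.1), (1, 0.3), (5, 0.2), (5, 0.4)]
for score_bin, (avg, std) in per_bin_stats(samples).items():
    print(score_bin, round(avg, 2), round(std, 2))
```

To see why a single review is hard to place, consider London in the unbalanced Booking table: the averages of bins 1 and 5 are 0.18 and 0.30, a gap of 0.12, while the standard deviations are 0.15 and 0.19, so the two distributions overlap substantially.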

4.3 Extension to Different Languages

Here, we plot score against adherence for the Booking dataset, considering reviews in Italian and in French. In the graphs where we consider positive and negative contents separately, dashed lines correspond to the negative ones. This is completely new material, not shown in the submitted paper due to page limits.

4.4 Language-Agnostic Reviews Clustering

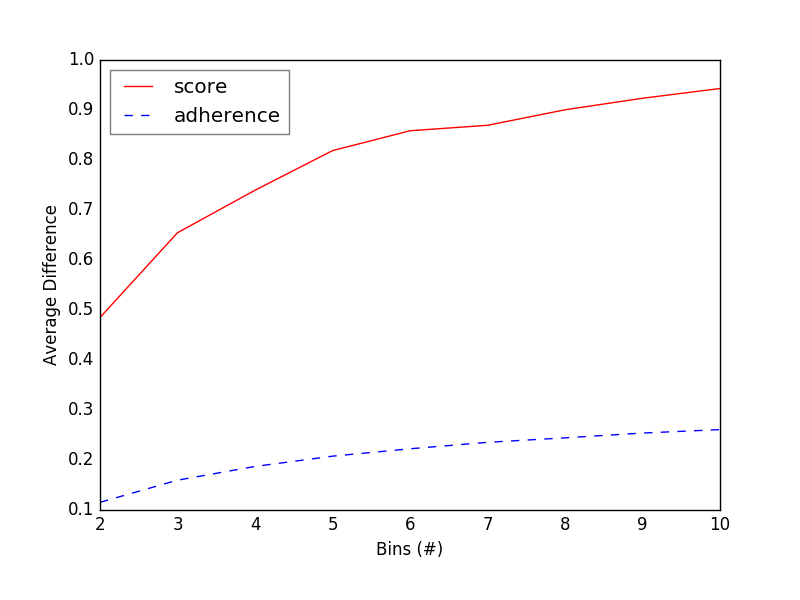

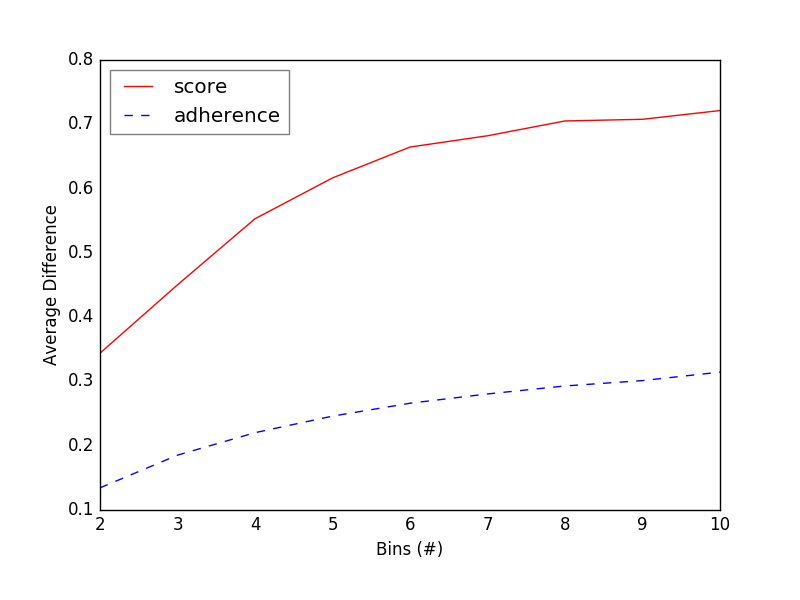

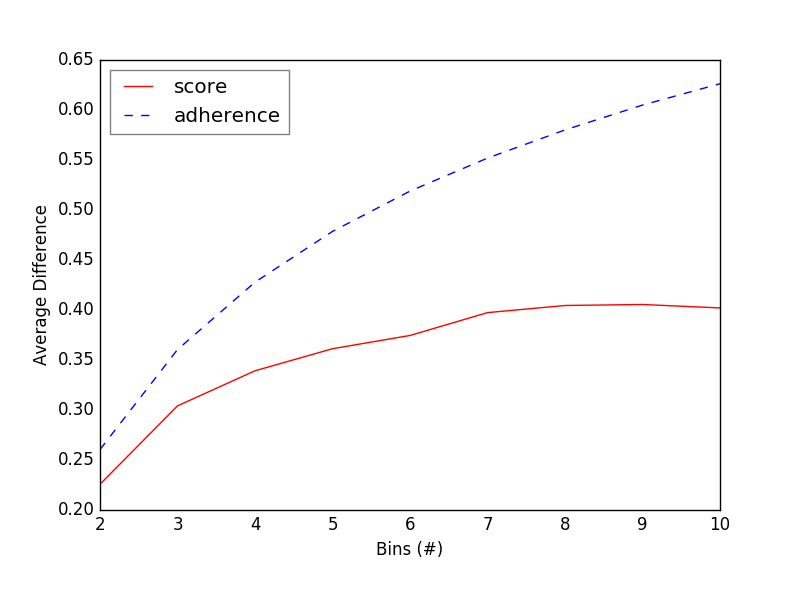

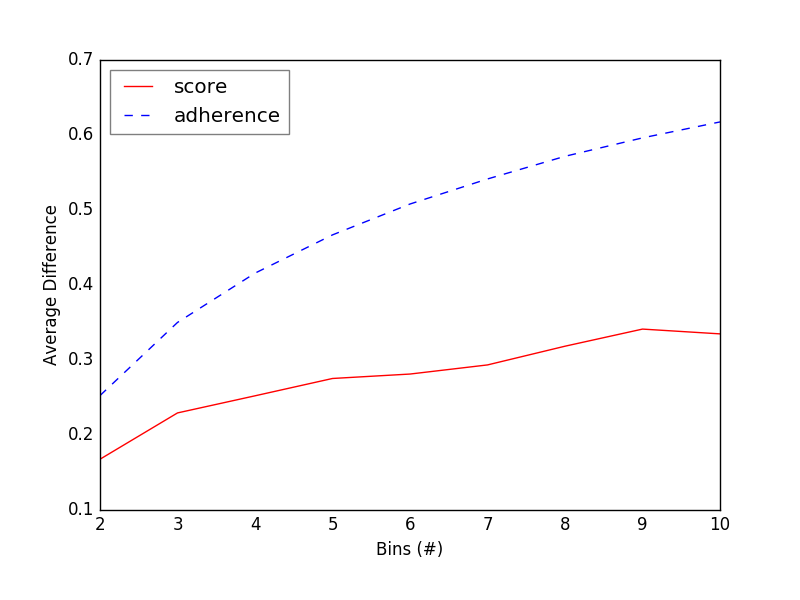

Here, we provide additional material for Section 4.4 of the submitted paper. The figures show the average difference between the average adherence of the first and last bins, and the average difference between the average score of the first and last bins, for items belonging to both the Amazon and Booking categories. In each graph, the x-axis reports the number of bins considered, whereas the y-axis reports the average difference values. The average differences for the adherence are drawn with a solid red line, those for the score with a dashed blue line. The graphs clearly show that, as the number of bins increases, the first and last bins include reviews that describe the product in considerably different ways, in terms of positiveness.
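The quantity plotted in these graphs can be sketched for a single item as follows. The way bins are formed is described in Section 4.4 of the submitted paper; here we simply assume that the reviews of an item are sorted by adherence and split into bins of nearly equal size. The function name `first_last_differences`, the `(adherence, score)` representation, and the toy values are hypothetical.

```python
from statistics import mean

def first_last_differences(reviews, n_bins):
    """Differences in average adherence and average score between last and first bin.

    reviews: list of (adherence, score) pairs for one item (assumed representation).
    Bins are contiguous, near-equal slices of the reviews sorted by adherence.
    """
    if n_bins < 2 or len(reviews) < n_bins:
        raise ValueError("need at least 2 bins and one review per bin")
    ordered = sorted(reviews)  # ascending adherence
    n = len(ordered)
    bounds = [i * n // n_bins for i in range(n_bins + 1)]
    first = ordered[bounds[0]:bounds[1]]
    last = ordered[bounds[-2]:bounds[-1]]
    diff_adherence = mean(a for a, _ in last) - mean(a for a, _ in first)
    diff_score = mean(s for _, s in last) - mean(s for _, s in first)
    return diff_adherence, diff_score

# Toy item with four reviews: (adherence, score).
reviews = [(0.1, 1), (0.2, 2), (0.3, 4), (0.4, 5)]
for n_bins in (2, 4):
    print(n_bins, first_last_differences(reviews, n_bins))
```

Averaging these two differences over all the items of a category, for each number of bins, would give one point per curve in the graphs.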

4.5 Representative Terms in First and Last Bins

Here, we extend Section 4.5 of the submitted paper, reporting the most relevant terms that appear in the first and last bins of reviews, as formed in Section 4.4. By "most relevant", we mean terms that are 1) most frequent, 2) part of the relevant terminology, and 3) not overlapping.
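The three criteria can be sketched as a simple filter. This is an illustration under assumptions: we read "not overlapping" as discarding terms that appear among the top terms of both bins, we use a naive tokenizer, and the function names `top_terms` and `representative_terms`, the cut-off `k`, and the toy data are hypothetical.

```python
import re
from collections import Counter

def top_terms(texts, terminology, k):
    """The k most frequent tokens in the texts that belong to the terminology."""
    counts = Counter()
    for text in texts:
        counts.update(t for t in re.findall(r"\w+", text.lower()) if t in terminology)
    return [term for term, _ in counts.most_common(k)]

def representative_terms(first_bin, last_bin, terminology, k=10):
    """Top terms of each bin, after removing the terms shared by both lists."""
    first = top_terms(first_bin, terminology, k)
    last = top_terms(last_bin, terminology, k)
    shared = set(first) & set(last)  # assumed meaning of "not overlapping"
    return ([t for t in first if t not in shared],
            [t for t in last if t not in shared])

# Toy terminology and bins of review texts.
terminology = {"battery", "sound", "refund", "great"}
first_bin = ["refund please, battery died", "battery refund"]
last_bin = ["great sound", "great battery, great sound"]
print(representative_terms(first_bin, last_bin, terminology, k=3))
```

In this toy run, "battery" is dropped because it is frequent in both bins and therefore does not characterise either of them.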

The following table shows the most relevant terms extracted for two Amazon products, from the reviews that, on average, speak worse and better of the products themselves.

| Product | Negative Score | Terms in the first bin (reviews with lower adherence) | Positive Score | Terms in the last bin (reviews with higher adherence) |

|---|---|---|---|---|

| B005XA0DNQ | 2.9 | refund, packaging, casing, disconnected, gift, battery, packaged, addition, hooked, plugging, shipping, hook, speaker, purpose, sounds, kitchen | 4.3 | compact, sound, great, retractable, portable, very, price, unbelievable, satisfied, product, easy, recommend, small, perfect, little, handy, size |

| B0083RXA86 | 2.4 | stereo, impressive, mostly, charger, button, product, charging, switch, louder, useless, price, usb | 4.6 | blue, charge, useful, very, coating, satisfied, quite, battery, enough, music, attachment, excellent, highly, quality, pleased |

The following table is similar to the one above, but covers the five Amazon categories with the most reviews. It shows the most relevant terms extracted from reviews in the first and last bins (which, in turn, feature the lowest and highest average adherence).

| Product | The most relevant terms in bin with the lowest adherence | The most relevant terms in bin with the highest adherence |

|---|---|---|

| Bluetooth Headsets | charge, item, amazon, hear, purchased, device, problem, bought | fit, clear, recommend, highly, music, easy, excellent, comfortable, quality |

| Bluetooth Speakers | reviews, speakers, gift, charge, volume, item, amazon, music, purchased, charging, bought | little, portable, excellent, bluetooth, easy, recommend, small, sounds, highly, quality, size |

| Screen Protectors | purchase, cover, bought, item, iphone, pack, instructions | perfect, samsung, clear, highly, fits, apply, perfectly |

| Unlocked Cell Phones | buy, phone, phones, seller, cell, everything, amazon, iphone, item, problem, bought | perfect, excellent, fast, card, easy, recommend, android, quality, sim |

| Appetite Control | try, tried, these, hungry, pills, reviews, products, oz, bottle, waste, eat, bought | loss, cambogia, lost, pounds, garcinia, recommend, diet, highly, definitely, lose, extract, exercise |

The following table shows the most relevant terms extracted for some Booking cities, for English, Italian and French. For Italian and French, only a few examples are shown, due to the low number of reviews in those datasets.

| City | The most relevant terms in bin with the lowest adherence | The most relevant terms in bin with the highest adherence |

|---|---|---|

| London | booked, bar, floor, wifi, stayed, stay, shower, receptionist, reception, booking, hotels | helpful, cleanliness, convenient, quiet, comfortable, beds, facilities, clean, excellent, friendly, size |

| New York | booked, square, checked, floor, door, stayed, stay, reception, desk, reservation, booking, hotels | perfect, bathroom, helpful, cleanliness, comfortable, beds, clean, excellent, small, noisy, friendly, size |

| Rome | albergo, prenotazione, servizi, stanze, hotel, struttura, soggiorno, reception, doccia, booking | disponibile, cortesia, gentile, confortevole, termini, ottima, gentilezza, abbondante, pulita, cordiale |

| Paris | emplacement, gare, accueil, anglais, chambres, hotel, clients, avons, sol, londres, wifi, lit, chambre, réservation, hôtel, réception, payé, bruit, booking | salle, géographique, déjeuner, proche, confortable, petite, petit, qualité, quartier, très, métro, calme, propreté, literie, situation, propre, agréable, proximité, bain |